Ethics, AI Tools, and Policies. How is Your Philanthropy Using AI?

Community IT was thrilled to welcome two respected leaders, Sarah Di Troia from Project Evident and Jean Westrick from Technology Association of Grantmakers, who shared their informed perspective on AI in philanthropy and walked our audience through this AI Framework. You will not want to miss this discussion.

Part 1 of this podcast introduced basic AI concepts and definitions and gave some practical examples of nonprofits very intentionally using AI to further their mission. It ended with results of a poll of the audience members and discussion on how nonprofits are using AI tools – individually, organization-wide, and/or to perform their mission.

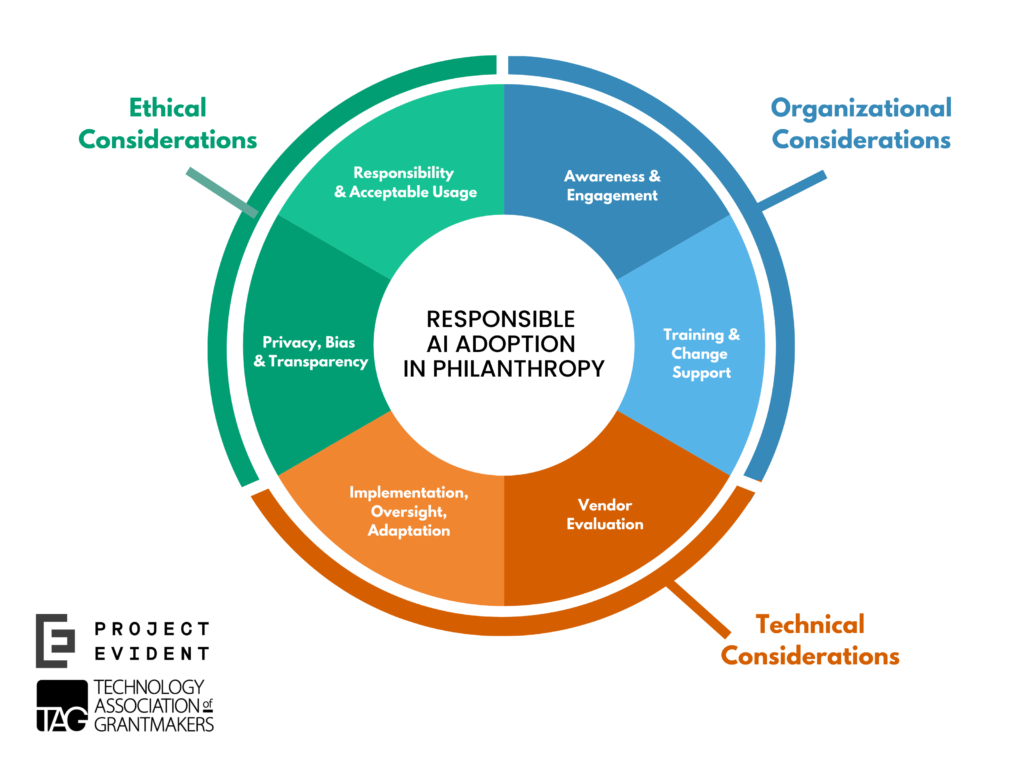

In Part 2, Sarah and Jean delve deeper into the AI Framework they have developed, to walk the audience through organizational, ethical, and technical considerations, and discuss the policies that ideally your nonprofit or foundation will put in place to govern your use of AI tools.

Like podcasts? Find our full archive here or anywhere you listen to podcasts: search Community IT Innovators Nonprofit Technology Topics on Apple, Google, Stitcher, Pandora, and more. Or ask your smart speaker.

As society grapples with the increasing prevalence of AI tools, the “Responsible AI Adoption in Philanthropy” guide from Project Evident and the Technology Association of Grantmakers (TAG) provides pragmatic guidance and a holistic evaluation framework for grant makers to adopt AI in alignment with their core values.

The framework emphasizes the responsibility of philanthropic organizations to ensure that the usage of AI enables human flourishing, minimizes risk, and maximizes benefit. The easy-to-follow framework includes considerations in three key areas – Organizational, Ethical, and Technical.

Sarah Di Troia from Project Evident and Jean Westrick from TAG walked us through the research and thought behind the framework. At the end of the hour they took part in a moderated Q&A on the role of nonprofits in the evolution of AI tools, and on how your nonprofit can use the Framework to guide your own implementation and thinking about AI at your organization.

As with all our webinars, this presentation is appropriate for an audience of varied IT experience.

Community IT is proudly vendor-agnostic and our webinars cover a range of topics and discussions. Webinars are never a sales pitch, always a way to share our knowledge with our community.

We hope that you can use this Nonprofit AI Framework at your organization.

Presenters:

Sarah Di Troia, Senior Strategic Advisor for Product Innovation at Project Evident, has spent thirty years in the social sector as a nonprofit leader, philanthropic funder, and consultant. As the Senior Advisor on Product Innovation for Project Evident, Sarah has immersed herself in AI for equitable outcomes, leading AI research, a cohort of AI pilots, AI webinars, and the creation of an AI readiness diagnostic. She co-led a national survey of nonprofits and foundations on AI use in partnership with the Stanford Institute for Human-Centered AI.

Sarah enjoys moments of great change inside organizations and across the sector, and is known for teasing order out of the creative chaos of growth and innovation. Prior to joining Project Evident, Sarah was Chief Operating Officer at Health Leads and a Managing Partner at New Profit, Inc. She earned her MBA from Harvard Business School.

Sarah led the Community IT webinar Artificial Intelligence (AI) and Ethics for Nonprofits.

Jean Westrick is the Executive Director of the Technology Association of Grantmakers, a nonprofit organization that cultivates the strategic, equitable, and innovative use of technology in philanthropy. Westrick brings two decades of experience building communities, leveraging technology, and leading innovative and programmatic strategies.

Prior to being named Executive Director of TAG, Westrick was the Director of IT Strategy and Communications at The Chicago Community Trust where she led change management efforts for the foundation’s $6M digital transformation initiative. Also, while at The Trust, Westrick directed On the Table, an award-winning engagement model designed to inspire resident action that was replicated in 30 cities nationwide.

A longtime advocate for equity in STEM education, expanding technology access and increasing science literacy, Westrick holds a Bachelor of Arts from Michigan State University and a Master of Science from DePaul University.

Carolyn Woodard is currently head of Marketing and Outreach at Community IT Innovators. She has served many roles at Community IT, from client to project manager to marketing. With over twenty years of experience in the nonprofit world, including as a nonprofit technology project manager and Director of IT at both large and small organizations, Carolyn knows the frustrations and delights of working with technology professionals, accidental techies, executives, and staff to deliver your organization’s mission and keep your IT infrastructure operating. She has a master’s degree in Nonprofit Management from Johns Hopkins University and received her undergraduate degree in English Literature from Williams College. She was happy to moderate this webinar on a nonprofit AI Framework.

Transcript

Carolyn Woodard: Thank you all for joining us at the Community IT Innovators webinar. Today, we’re going to walk through a new AI framework put together by Project Evident and the Technology Association of Grantmakers (TAG), based on surveys of practitioners and their own research. TAG and Project Evident have created a guideline for thinking about introducing AI tools and concepts to your nonprofit organization or philanthropy.

Today, they’re going to share the results with us and help us think about the needs AI can address, the policies our organizations need to put in place, and ethical areas where nonprofits can make a difference in the development and use of these tools.

My name is Carolyn Woodard. I am the Outreach Director for Community IT and the moderator today. And I’m very happy to hear from our experts, but first, I’m going to go over our learning objectives.

By the end of this session, you’ll

- learn the definitions of AI and generative AI, and the differences;

- understand the framework and the design principles used in it;

- discuss the ways AI is being used in philanthropy: individual use, organizational use, and mission attainment;

- and review the considerations – organizational, ethical and technical.

They have an amazing graphic they’re going to share with us that helps walk you through those different aspects of AI.

But before we begin, if you’re not familiar with Community IT, just a little bit about us. We are a 100% employee-owned, managed services provider. We provide outsourced IT support and we work exclusively with nonprofit organizations. Our mission is to help nonprofits accomplish their missions through the effective use of technology. So, we are big, big fans of what well-managed IT can do for your nonprofit.

We serve nonprofits across the U.S. We’ve been doing this for over 20 years. We are technology experts. We are consistently given an MSP 501 recognition for being a top MSP, which is an honor we received again in 2023.

I want to remind everyone that for these presentations, Community IT is vendor-agnostic. So, we only make recommendations to our clients and only based on their specific business needs. We never try to get a client into a product because we get an incentive or a benefit from that.

We do consider ourselves a best of breed IT provider, so it’s our job to know the landscape, the tools that are available, reputable and widely used, and we make recommendations on that basis for our clients based on their business needs, priorities and budget.

Introductions

You can imagine we have a lot of clients asking us about AI. So, I’m very happy for this presentation today. And now, I’m so excited to hear from our experts. So Jean, would you like to introduce yourself and the AI project at TAG?

Jean Westrick: I would be delighted. Thank you so much. Hello and welcome, everyone. I am Jean Westrick. I am the newly-appointed Executive Director for the Technology Association of Grantmakers. I’m just about closing in on my first 90 days here at TAG. And I’m really pleased to be sharing the framework for responsible adoption in philanthropy with you today.

TAG is a membership association and we focus on stewarding the strategic, innovative and equitable uses of technology in philanthropy. Our work builds on knowledge within our sector. It strengthens networks through these opportunities to bring members together and share what they’re doing and advance organizational practice. And we also provide thought leadership for the social sector and the philanthropy community writ large. I’m really excited to be here as the voice of philanthropy tech.

We have members across the globe, in North America, as well as in Europe and in the UK. We believe that technology is critical to executing the business of philanthropy, the work that we do every day, and to the work of nonprofits as well. Every organization is essentially a technology organization. And every organization recognizes the strategic imperative of technology in the way in which we deliver our missions. It’s often the way we connect with our constituents.

Bringing together all of these groups, we’re really thinking about ways in which we can meet the practical, pragmatic needs of those folks who are trying every day to keep the lights on and navigate these decisions, while also supporting the organizational innovations that happen through technology. So, I’m really pleased to be here to share a little bit about our mission and this framework. And with that, I’m going to pass it on to my co-presenter and colleague, Sarah.

Sarah Di Troia: Thanks so much, Jean. Welcome everyone. It’s a pleasure to be here in dialogue with all of you about AI. I’m Sarah Di Troia. I am Senior Advisor in Product Innovation for Project Evident. We’re a nonprofit. We are also excited about the use of technology. But for us, technology is a means to an end. And that end is stronger outcomes for communities. And so our focus is really on data and we see AI as a way to be able to gather new types of data, analyze data and make it really actionable for communities and for folks who are on the front lines of organizations and foundations, so all of our missions can be achieved.

It’s really been a delight partnering with the TAG organization over this last year developing the framework and with so many members of the TAG community. And if there are any of you who are listening that were part of our workshops, we really want to thank you so much for contributing to the development of this framework.

AI Framework Development Team

Jean Westrick: Thank you. As Sarah mentioned, this framework was the result of a lot of input from a lot of different folks. I want to just take a minute to actually recognize the AI framework team: Kelly Fitzsimmons and Sarah from Project Evident, as well as Gozi Egbuonu, who’s here on the call today, and my predecessor Chantal Forster, who really had a lot of foresight to help kick off this project and lead this work.

This framework was based upon the input of nearly 300 practitioners, leaders and partners throughout the social sector. And it is our hope that this framework will really help folks navigate this evolving landscape of AI with some informed optimism to be able to evaluate when it’s going to be the right decision and when it’s not. At tagtech.org/ai, you’ll be able to download the framework, as well as additional resources that we’ve put out.

Ethical AI Framework Design

It’s interesting how fast this landscape has evolved. Few in the philanthropy sector were even really talking about AI a year ago. And now, it seems to be everywhere on everyone’s lips. And it only continues to evolve faster and faster, it seems.

Almost a year ago, TAG held its first webinar on AI and we’ve held a number of those since. The framework really comes out of those observations, insights and questions.

- What is AI? And why does it matter?

- How can our organization use AI to become more efficient?

- What does ethical AI look like?

- What would a policy be in order to ensure that it can happen safely and effectively within our sector?

- How do we ensure that AI doesn’t undo the decades of work that nonprofit and philanthropic communities have done to address inequities within our communities or undo decades of the justice work that has happened?

So, it’s from those questions, and from recognizing that this is an evolving landscape and these decisions are difficult to navigate, that we wanted to make sure we were meeting people where they’re at. AI is not new and Sarah will go into a little overview to differentiate between what we’re talking about here.

Mainstream tools like ChatGPT have certainly evolved the conversation really quickly and there’s an appetite to learn more and to understand how to do this in a way that meets the missions and the visions we have for our own organizations.

We can put these resources in the chat as well, but there are a number of frameworks out there already. The Partnership on AI’s Guidance for Safe Foundation Model Deployment is one model out there, and Responsible AI Commitments has been put together by RI Labs. There are principles that have been developed by Microsoft, and even Project Evident has a framework as well. These are all complementary. I’ll let Sarah talk about hers if she so chooses.

But we wanted to, with this framework, provide people a starting place with some tactical guidance about how to use and experiment with AI. So Sarah, you want to talk about the design principles?

Sarah Di Troia: As Jean was saying, the world has changed dramatically in the last year since we began this project to develop a framework to help foundations be practical and tactical around their engagement with AI. At the time, about a year ago, most of the frameworks that existed were pretty high level. They were values-based frameworks, principles-based frameworks, but they didn’t really get down to brass tacks. What the TAG membership was hearing, and what we were hearing from our foundation friends and grantmaker friends, was that they really needed something that was more practical.

And so we had the benefit, the pleasure of working with a number of people in the TAG membership community. We were able to assemble a five-person steering committee that helped us design the overall project. And then we had a twenty-person design committee that worked with us over three different two- or three-hour sessions, where we worked in big Miro boards as we collaborated on what was important, what people were facing, and what challenges, questions and opportunities people really felt they were facing around AI.

The way we centered all of those conversations was using a series of design principles. What was very clear to us at the beginning is that we wanted to make sure that whatever was developed was going to center equity at its core. We wanted a very collaborative approach. This was not going to be a framework that was developed in a closet and then released. It really had to be a for us/by us approach. And Jean will talk about that a little bit more.

We wanted to make sure we had some cross-team collaboration. So, it wasn’t just foundation CTOs that were in the room, but we wanted to make sure we had some program officers, we had some practitioners from nonprofits, and we had tech leaders. Each of these groups had a mixture of perspectives, so that we had the benefit of those shared perspectives in developing the framework.

We really wanted to empower technology executives to lead strategic conversations around AI inside of foundations. We really felt like there had been a disservice to technology professionals inside of foundations. They weren’t always at the strategy table. They weren’t always in those conversations. But we knew that AI was going to force a whole set of conversations inside of foundations that were profoundly strategic, both around mission attainment, as well as around culture and norms inside of the foundation. And it was really important to us that technology executives weren’t just in the box of giving a thumbs up or thumbs down on the technology, but were really helping to facilitate a broader conversation about strategy and implications for AI usage.

Obviously, we want to promote privacy, security and transparency. And along with community, it was really important to us that this was technology brand and product neutral.

A lot of the activity, a lot of the first movers out of the gate around AI usage in the social sector, not surprisingly, were Microsoft, Salesforce, and Amazon Web Services. We could not possibly do our work without their incredible investments in technology. And yet when they engage with our sector, they only do it from the perspective of brand halo or creating a market for their product. We feel like that somewhat limits our conversation. So, we wanted to make sure that we are creating a much broader brand-neutral conversation. Let me pause there and Jean will talk about the for us/by us big tent.

Jean Westrick: Absolutely. Back in November 2023 at the TAG Annual Conference, we held a 90-minute workshop with about 300 people in a huge ballroom. They brought in real-world use cases and then used the framework to evaluate what responsible adoption would look like in their own organizations and what the steps would be in order to do that. That was an opportunity for us to get feedback about how this might work with a variety of different perspectives and use cases.

That was a very informative piece. Sarah was facilitating that and I was the incoming Executive Director at that point. I had not yet joined the TAG team. I was really heartened by the community spirit and the way in which people were generously giving their time and their experience in order to make this a useful tool.

Sarah Di Troia: It’s hard to overstate the amount of change to the framework from that workshop. I mean, it was just the level of insight and input that was given to us so generously by that community. It really dramatically shifted what the emphasis was in the framework. So, thank you, thank you, those of you who were involved.

What is Artificial Intelligence (AI)?

“Traditional AI”

Just to make sure we’re all talking about the same things: what might be called “narrow AI” or “traditional AI” is the capacity of a computer to mimic human cognitive functions such as learning and problem-solving. And I’m going to give you a couple examples of what that looks like.

It has been around for decades, for 30 years, and it certainly has shaped your life as a consumer directly for probably the last 10 years at least. Everything from Netflix to Alexa to just about every way that you interact with a consumer-based company has some form of AI in order to expedite the way that they’re interacting with you or to more closely serve your needs. And in their case, that’s to sell you more product.

Generative AI

That’s a little bit different from where all of the heat and light has been since November of 2022, when ChatGPT was released to the public. That’s generative AI. Generative AI is the capacity of a computer system to create things that seem like they’re new, whether that’s new text, new audio, new video, new sounds, new pictures, all of it. And it uses a massive amount of data as training data, so that when somebody makes a query or a request, it can create something that appears to be new. But of course, it’s based on all of the learning underneath that, all the data that’s been used for training.

Let me actually give you some examples, because that would probably make it more clear for folks. I want to talk about some common machine learning applications and then give you one example from the generative AI space.

Predictive analytics

This is something that most consumer-based companies are using. Famously, Target used predictive analytics to get a sense of which of their customers were likely to be pregnant before they were buying diapers. They wanted to identify customers who were entering a period when they were going to acquire a lot of new products. And they realized, through analyzing tons and tons of data, that if you bought a combination of about 27 different products, they could predict with strong accuracy that you were pregnant. Then they could offer you coupons and other ways to help gain a share of your wallet.

The way we’ve seen some nonprofits using predictive analytics is to move away from a one-size-fits-all program model to… customizing it to different subgroups within their client populations that they may not understand are actually subgroups.

Examples of Nonprofit Mission Attainment Using AI

Gemma Services (https://www.gemmaservices.org) is focused on working with young girls who need inpatient mental health support. They work with about 250 girls a year. They were interested in whether or not they could tailor their program to subgroups, but they weren’t sure what those subgroups would be. Using predictive analytics, they were able to identify that, yes, there were subgroups. Some elements of their program model were more important to some girls than to others, and they were then able to begin customizing the way the program model was fit to each girl who came through the program.

After a year (I think this is the 2022 data), they found that they could lower the risk score for girls. That acuity score was about whether or not you’d show back up in the emergency room or come back to the program after discharge. They lowered that by almost 100%. And they decreased the stay in their inpatient facility by just under three months.

So, really dramatic outcomes when they can move away from a one-size-fits-all program model and, similar to Target, use predictive analytics to understand subgroups of their customers and better tailor their offerings to those customers.

Recommendation Engines

Another form of traditional or narrow form AI would be recommendation engines. You’re familiar with this from Netflix, let’s say, which helps simplify a complex decision about what you might want to watch tonight by narrowing hundreds of thousands of options down to the ones more likely to interest you, based on your taste but also the taste of subgroups that are similar to you.

An organization that is using recommendation engines is a group called WorkMoney https://workmoney.org. The challenge that they’re working on is that about 46% of Americans live in families with insufficient income to meet their basic needs.

So, they’re interested in doing two things.

- They want to connect people to basic goods and services, and get them aligned with government programs and other programs that can support the family’s economic well being.

- They also want to reconnect them into the democratic process.

Many people feel like, what does the government do for me? And so they’re trying to both connect them to services, but also get them connected to local politics to understand how tax dollars are being spent in ways that really support the way they live.

That recommendation engine is connecting clients to social services, making strong recommendations of ones that would most likely be a good fit and then supporting them in the application of those services. And then keeping them connected via text messaging to the overall democratic process.

Natural language processing

This is the technology that underpins Alexa, Hey Google, Siri, et cetera. Natural language processing is being used by an education nonprofit called Talking Points (https://talkingpts.org). They are focused on how to increase that connection between families of young students and teachers. There’s a wealth of scientific data that shows that the stronger the relationship between teachers and families, the better the academic outcomes for students.

What they have done is create much more sophisticated translation software. They can translate over 145 languages now. So, you can do real-time texting back and forth between families and teachers. And what they found after using this technology is that they decreased absenteeism, particularly for marginalized populations, by over 10%. And they increased academic gains in Math and English language arts for disadvantaged populations by over 10%. So significant shifts there.

What they’re looking to do now is to test what nudging looks like. Because it’s not every interaction between a family and a teacher that’s going to result in a better outcome for a student. It’s more likely to be a less transactional interaction: not “so-and-so forgot their lunch,” but “so-and-so had a death in the family” or “so-and-so’s really struggling with math.” So they’re going to experiment with what it looks like when they do prompting or nudging to encourage teachers and families to have these types of communications.

So, these are all types of technology that are narrow form AI. They’ve been around for a long time. There are frankly fewer risks associated with these types of algorithms, because they are more narrowly construed. And they’ve been on the market for a lot longer, so we’ve had a clear understanding of how they’re working, and where some of the risks and edges are.

Generative AI Examples in Nonprofit Mission Attainment

Generative AI is, again, the newer technology that has been commercialized. We have fewer nonprofits using Gen AI, only because it’s still incredibly expensive.

Crisis Text Line (https://www.crisistextline.org) is a national text-based crisis service for people who are in crisis, similar to a crisis hotline. They were really struggling with the challenge of how to prepare volunteers. Volunteers go through a 30-hour training program, but they were noticing that some who came out of the training program were not fully getting out onto the platform and really being peer counselors to folks in crisis. They lacked confidence in their own skills. So, Crisis Text Line created a simulation environment using generative AI.

They wrote their own stories because they don’t have access to the texts that go back and forth from live texters and their peer counselors; that’s protected information. So, they created similar texts based on their experience of working in those exchanges. They used that data then to train a generative AI model. And then that became a volunteer training model.

I can come into that model, and each time I go in, I start a new interaction with somebody who is either high risk or low risk: somebody who is at risk of self-harm, or somebody who is depressed or anxious or sad but is not at risk of self-harm. And as a volunteer, I can use that model to practice my text exchanging and to gain comfort.

They’re still in piloting mode with this, but their hope is that this will reduce the yield loss of folks who are going through their volunteer program, but not becoming full members of the platform to be able to support their peers in crisis.

That’s sort of a wealth of examples that you can see that’s happening across the nonprofit sector. And I’m happy to share once we get further into this, some examples from the philanthropic sector as well.

How AI is Being Used in Philanthropy

Jean Westrick: Thank you, Sarah. That’s an excellent grounding. So now, we’ve seen different applications of AI as a frame to hang our knowledge on. We’ve identified a number of ways AI is being used in philanthropy.

I would argue that this is also being used in the nonprofit sector too, in these ways.

- Individual use cases are when a staff member or a stakeholder at an organization is using publicly available AI tools that may not be provisioned by the organization itself. Using ChatGPT to generate correspondence, for example, is an individual use case.

- With regards to organizational efficiencies, these are the kinds of tools that have been provisioned by your organization. They’re usually found in your tech stack or within your existing technical application. They’re used to save time by using predictive analytics and improve the efficiency of the organization. So, it could be the AI tools in Copilot in your Microsoft stack, or it could be something like turning on the AI companion in Zoom in order to take meeting transcriptions.

- Mission attainment tools are those that have been provisioned by the organization for that deeper discovery, those deeper insights to help with identifying strategies for fundraising or analyzing impact and those sorts of things.

- And then there is nonprofit enablement. At TAG we speak largely to funders and we recognize that funders are in a position, and they should be in a position, to support and enable their own nonprofit partners with the adoption of AI as well.

I think this framework is applicable for both audiences, because it is a starting point and gives you considerations that could be tailored to your own organizational context.

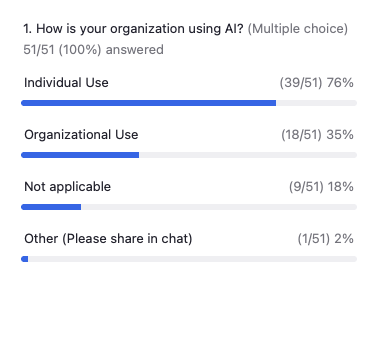

Poll: What are you using AI for at your organization?

Carolyn Woodard: Thank you for making that connection between people who are working in nonprofits and nonprofit missions and foundations and funder missions that are supporting their nonprofits. There is a lot that is definitely in common.

What are you using AI for at your organization?

So, this is multiple choice, you can fill in as many as apply.

- The first option is individual use. So, that’s what Jean was just mentioning. You or staff are using ChatGPT or other AI tools that you go out and just use on your own.

- Organizational use may be AI embedded in a tool that you use, like Teams, or it could be a specific tool that you use within your organization that uses AI.

- It could be not applicable. You’re not at an organization or it just doesn’t apply for some reason.

- Or it could be some other way that you’re using AI.

That would encompass people whose organizations are using AI in ways related to some of the examples that Sarah just gave us, where you’re achieving part of your mission or enabling your mission by using AI to do additional work or, because you have limited resources, using some AI tools to target who you’re spending those resources on.

And if you are doing something in that realm, if you could put that in the chat, we would love to hear more from you about what your organization is doing.

Jean Westrick: We’ve talked to hundreds of organizations and some of this is tracking what we were already hearing: that most use is in the individual space. 76% responded that individual use is the way they’re using AI, with 35% organizational, 18% not applicable, and 2% other, which is interesting.

Carolyn Woodard: Someone says they’re feeling a little FOMO, Fear Of Missing Out on AI. I feel like we’ve talked about this in some other contexts as well with it changing so rapidly. Remember back to when Alexa or Siri was first out there and it didn’t work at all, you would ask it to do something and it completely misunderstood you?

I feel like there’s not a huge drawback to waiting. If you are still getting together how you would use this, the tools are going to be different and they’re going to be better in even just a year. So, don’t feel FOMO that it’s going to get away from you.

But if you are interested in it, starting now, coming to this webinar and using other resources to learn as much as you can about it as you get ready to use it is a good idea.

How are Nonprofits Using AI Tools?

Individual Use

Sarah Di Troia: Individual use. This is where your organization has the least amount of control. ChatGPT is available. You don’t have control over whether or not your potential grantees are using ChatGPT or Grantable. You also don’t have control over whether or not your employees are using them as well. Otter.ai or other transcription services, that’s a big thing that came up when we were doing that large 300-person conversation around the framework. We asked people how AI was coming into their world. Transcription services and the ability to use generative AI to generate copy were two things that came up quite a bit.

Organization-wide Use

When we move to organizational efficiency, again, it’s those transcription and summary services in a Zoom account. That is unlike Fireflies or Otter, which somebody can just send individually. These are tools that you’re provisioning, that your technology team has paid for and has turned on.

I think this is an interesting place, because right now, you have a little more control over this than you will in the future. These are new features that are likely available to you through some of your technology providers that are in your tech stack. And increasingly, there’ll be standard features that are in your tech stack that you cannot turn on or turn off.

Right now in Zoom, you can turn it on and off. AI features in grants management software are upsells if you’re in Fluxx or Submittable. But I would expect in the future, AI will just be a core part of the software. Deploying Microsoft Copilot was just raised recently. Right now, that’s a pretty significant upcharge. I think it’s like a hundred, a hundred plus per seat right now. But I would imagine that price will come down as others offer similar products. And maybe eventually, it’ll simply be a part of the core product and there’ll be something else new and shiny that is an additional charge.

Mission Attainment Use

Finally, if we look at mission attainment, this is about how you support grant-making decisions or find grant-making bias.

That was one of the big things that folks were interested in: how do I get better at choosing where to direct my funding, or understand where bias is already happening in my grant-making selections?

Enabling measurement, learning and evaluation (MEL), how do I do that much more broadly across my organization and have it really at the fingertips of folks who are making decisions? Can I use it to analyze a theory of change and to understand gaps and errors?

Landscape analysis. I want to do funding in a new area or even an area I know well, but can this technology be used to help me understand the landscape?

Universal internal search on prior grants and reports. I did mention earlier that I’d talk a little bit about what I’ve seen at some foundations, and the Emerson Collective has basically gotten rid of their intranet. The internal way that employees can search for information about their company and their benefits, et cetera, that is now all being handled by generative AI. That’s Phase 1. Phase 2 is they’re moving all the grants and the grantee reports into that area. They see it as a way of being able to summarize past knowledge of the foundation very quickly, so that folks can have access to it immediately and be well equipped to be in dialogue with the community, which is really where they want their program officers to be.

Jean Westrick: That’s a great overview of the ways in which AI is being used. So, fear not if you’re feeling a bit FOMO, it’s fine to be where you’re at right now. We’re all in a space of discovery and learning, and trying to figure out what is the best way forward. And that’s why I’m excited to share this framework. Because I think that this is a great place to start.

Nonprofit/Philanthropy AI Framework

So, regardless of your usage, whether it is individual, organizational, or for mission attainment, or whether you’re thinking about funding nonprofit enablement, AI adoption, in order to be responsible, needs to address some important considerations as part of the decision-making process and the journey.

The framework has three buckets of considerations and we’ll go into deeper detail on each of those.

- Ethical

- Organizational

- Technical

And I will say that these sorts of considerations cannot be handled in isolation or in a vacuum. They’re an opportunity for members across your organization to come together and address these considerations within your organizational context.

And at the heart of each of these is to do it responsibly. To do it ethically is to center the needs of people and think about these considerations in alignment to your organizational values.

I cannot overstate this enough. We are on an AI journey. We’re at the very, very beginning of that journey. And this is going to be a process. This is not like a one and done, where you’ve tested it and you’ve deployed it, and it’s over. These things will get better with time. And also too, your needs will evolve with time.

So, as you think about this, everything should be human-centered. Listening to your key stakeholders throughout the considerations, make sure that you have diverse voices around there, so you’re considering the implications within your context. Thinking about an iteration around a pilot that can help bring those various stakeholders in and do that feedback and such.

The colors on this framework were selected to ensure that the maximum number of people could see it. Recognizing that almost 5% of the population is colorblind, we selected this color palette to make sure that our technology and our tools meet the needs of the most people possible. That’s one way you can think about centering humans: through the accessibility lens.

Organizational Considerations: Your People

Sarah Di Troia: Great. I’ll walk through the elements of how you think about applying. There’s more detail in the document that we’ve linked to really help you get started, but as Jean was saying, everything should be human-centered.

And so we’d like you to think about:

- Finding early adopters. We have some questions about change management. Adopting AI is no different than any other type of significant change that you are managing throughout your organization. And we’ve all certainly had to manage a lot of change over the last five years. But thinking about who your early adopters are, do you already have a group of folks who are always on the cutting edge of technology? How do you bring them in to really be the champions of experimenting with AI and then talking about AI with their colleagues?

- Creating a strong two-way learning process. This is not something that your technology team is going to do off in a dark closet by themselves. They really have to be in strong two-way engagement with the staff.

- Incorporating staff and, where reasonable, grantees in tool selection. One of the organizations I didn’t talk about was a foster care youth organization that was using precision analytics and they had foster youth who were on their design committee. So really, thinking about human-centered design. Do you have edge users, the folks who are likely most impacted by the system? How do you have them be part of the designing of the system?

- Internal discussions to inform policy and practice. You likely already have a data governance policy and a privacy policy inside your organization. Pulling those out, dusting them off, seeing whether or not they go far enough for you based on where AI is today. They were probably written in a pre-AI time or not considering AI. And how are you circulating and engaging your team on those policies?

We encourage people to think about data governance and data privacy, like diversity, equity, inclusion and belonging work. That is not a one and done activity inside of your organization, nor should privacy and data governance be one and done either. You should consider those as an always-on education and reinforcement opportunity.

- Assess how AI will evolve or sit within your mission and then build internal communications. Again, we’re on this journey as Jean was saying; it’s not going to be static. And so thinking about what is the internal education and communication you want to be using to make sure that everyone’s staying with you on this journey around ethical considerations.

And interestingly, when we talk to people about where to start, you’ll notice that this is a circle graphic; you can start anywhere.

We think that you should start either with engaging people or with the ethical considerations. Those are sort of equally important in terms of where you could start.

Ethical Considerations: Your Mission

We really encourage people to not try to prohibit AI. AI is like the internet. It is not a winning strategy to yell at the ocean and tell the tide to stop rising. That is not going to be a win, although it might buy you a little bit of time. But as we already said, there’s AI use that’s happening outside of your control. It’s better to engage on this initially.

Be mindful about how data should be collected and stored. Thinking about how your data is being collected, thinking about the fact that you have access to and are storing likely lots of data from communities, because that’s the data that’s coming to you through grantees. Being really mindful about how that’s being stored and how it’s being used inside of your organization.

Adapt that data governance policy, if it needs to be adapted.

Setting policy for notifying people when AI is being used. When am I talking to a human? When am I talking to a bot? Is my grant being reviewed by a bot before it’s getting to a human? The understanding of how AI is being used.

Creating the ability for folks to opt out.

Ensuring human accuracy checks. I mean, we don’t have enough time in this webinar to go through the myriad of ways we have been shown that technology in general and AI in particular can fail communities of color and marginalized communities. So make sure that you have human accuracy checks built into however you are using AI, and enable people to request a manual review of AI-generated output and to share back that output.

These are all considerations that were shared by our colleagues and prioritized by them as ways that you can think about privacy, bias, and transparency, how to guard against the risks, and responsibility and acceptable usage.

Technical Considerations: AI Tools

Jean Westrick: Technical considerations are really the last conversation that you should be having. I know I’m saying this as someone who is representing the technology space within philanthropy, but a lot of these challenges are not technical challenges per se. They are also human challenges. And that is the hardest part and the place where you should start.

When you think about the technical considerations, you want to make sure you understand the platform you’re using, and what kinds of data privacy and security and biases are built into the model. You also want to understand what the risks are. That is really important. I know that there’s a question up here about the Emerson Collective example that Sarah used. ChatGPT uses a large language model (LLM) that is trained on a lot of data scraped from a lot of public places.

In terms of the Emerson Collective, it’s a closed large language model. So, a private LLM, that is your data only. You’re going to be able to control data much better than if you put sensitive data on a third-party platform, where it can end up in the learning model.

With regard to other technical considerations, you’re going to want to work with a diverse group of folks who can help identify low-hanging fruit where you can adopt AI. Think about the things that are ready for automation, that are really transactional. That’s a really good place to start, as opposed to something that is really core to your mission. That’ll be a good place to experiment and understand what the edges are, and also to increase the tech fluency within your staff.

With all of these tools, there is a learning curve in order to use them. And so you’re going to have to practice and you’re going to have to allow people to incorporate change in a natural way, because there is a learning curve here.

And then you want to make sure that there’s some oversight and ways in which you can evaluate that.

Just to underscore, Sarah recommended starting at the top of that circle and being able to have a conversation about what your AI vision is and centering people in that conversation, exploring those ethical considerations before you move on to tools and platforms and those sorts of things.

Carolyn Woodard: I think the good news is we are at the end and now is really the opportunity to be able to engage with questions that have been coming in and this phenomenal chat stream that’s been happening this whole time.

Jean Westrick: Yes. We recognize that this framework is really just a starting point. And then I want to underscore that you can find more information on tagtech.org/ai.

We strongly believe that our sector has a duty to balance the potential of AI with the risks and to make sure that we adopt it in alignment with our values. That is key. Our commitment to the work that we do remains centered on our missions for a more just, equitable, and peaceful world. The genie is out of the bottle on AI; it’s here.

So, it’s our opportunity to embrace this with an informed optimism to make sure that AI adoption is human-centered, prevents harm, and mitigates those risks.

We do it in a way that is iterative, that pays attention to the change journey that everyone’s on.

And then I think the most important question you should ask yourself is when AI isn’t the answer. Just because you buy a hammer doesn’t mean everything is a nail. And so you really have to ask yourself, is this the best way to solve this challenge? Because it may not be.

Q and A

Carolyn Woodard: We do have a couple of questions that came in that I thought were interesting.

What kind of organizational policies do you recommend, and also do you recommend having an organizational AI policy before implementing this AI framework?

Jean Westrick: Great questions. I think certainly an AI usage policy is something organizations need to take up soon. And it’s okay to draft that before you have implemented it. You may need to evolve it and revisit it as you go down the journey.

Sarah also mentioned your data governance framework and your data governance policy. With both of these things, writing the policy is not enough. You have to think about how to create that policy, create it collaboratively and then how you are going to roll it out and socialize it, so people have the training and the knowledge in order to address the policy.

Carolyn Woodard: You may already have data policies. Even if they haven’t been revised in the last year, or longer, you might have a starting place already. If you ask around in your organization, you may not have to start from scratch on your data policy. You may have something in there and you can add these AI considerations to it.

Community IT has an acceptable use policy template, a place to start from, that’s available free on our site.

That doesn’t address all of these other policies, like who has access to your data and to different departments’ data. If you’re going to start using AI to query your documents, are there some HR documents in there that aren’t supposed to be in there? You’re going to want to have policies in place for that.

How do you introduce AI to staff that might be afraid of it or have no idea where to start?

Sarah Di Troia: Don’t look at AI differently than you thought about the education and evolution you wanted to have in your organization around diversity, equity, inclusion and belonging.

Think about the comprehensive plan that you put in place, the time you gave the folks you felt like could be examples of where you wanted your culture to be, of what you wanted your norms to be, and how you involved them in the development and the championing of those changes. That has nothing to do with title, by the way. That has to do with who’s a culture carrier inside of your organization.

There are lots of different models out there around change management. But you’ve all been leading change pretty dramatically for the last five years, if not before that.

And so I encourage you to think about those early adopters. I encourage you to not think about title and to think about who holds informal authority, as well as formal authority, in your organization. And what are the ways that you can get people comfortable with AI through examples? People might not understand that Netflix is a recommendation engine. But most people have used Netflix, and most people have been on Amazon’s website and it’s offered them other products.

When we talk about AI, it’s not entirely foreign to folks. But it’s helping do the translation of what could a recommendation engine like Netflix mean for us inside of the problems that we’re trying to solve? And as Jean said, you do not want to be the hammer assuming that everything’s a nail.

Look at the persistent barriers or challenges we face in achieving our mission and then really understand, well, is AI something that could help us with some of these barriers? Or frankly, is there something else we could do that would be far less expensive?

A fairly easy example is, there are lots of tools available that use AI to support grantees in writing grant applications. Grantable is one of them. There are more and more grants management systems that are providing an additional AI tool to help you summarize grants, process grants, et cetera. If the problem that you were trying to solve is that the grant process is too onerous and the transaction costs are too high, both for grantees and for program officers, putting AI on both sides of that process is a really expensive way to solve that problem. If the problem was that the grant process was too onerous, you could change the process. You didn’t have to bring AI to both sides of that problem.

So, I really encourage people to be thoughtful about it. There could be less expensive, less invasive, easier to design solutions to your challenges. Or it could be that AI would be a tremendous opportunity, but have it be a problem-focused conversation, not a technology-focused conversation. Technology’s the means to the end.

Carolyn Woodard: I love that framing of it. I think we hit these learning objectives and I hope you feel that we did too:

- Learning about AI and generative AI. Thank you so much for walking us through those differences and then giving us these amazing use cases and examples. I think that’s really helpful to help pull it all together.

- The framework design principles and the ways AI is being used in philanthropy and nonprofits.

- And that amazing graphic that helps you really think through those three different aspects and the different aspects within those aspects around using AI and where to start from. That framework is available for download on the site https://www.tagtech.org/page/AI.

Thank you, Jean and Sarah for taking us through all of this and have a wonderful rest of your day.