3 AI Impacts on Cybersecurity at Nonprofits, presented at Good Tech Fest

What are the main ways AI impacts nonprofit cybersecurity risks?

Social engineering, trust, and data risks are the three big areas where AI will have impacts on cybersecurity at nonprofits that you need to be aware of. Whether or not your organization is using AI, these are areas where hackers are definitely using AI to devise new methods of attack.

Not surprisingly, Matt Eshleman, CTO at Community IT, recommends creating policies that address the way your staff uses AI – if you haven’t updated your Acceptable Use policies recently, AI concerns are a good reason to do that. He also recommends taking an inventory of your file sharing permissions before AI surfaces something that wasn’t secured correctly. Finally, make sure your staff training is up to date, engaging, and constant. AI is creating more believable attacks that change more frequently; if your staff don’t know what to look out for you could fall for the newest scams or accidentally share sensitive data with a public AI generator.

Community IT has created an Acceptable Use of AI Tools policy template; you can download it for free. And if you are trying to update or create policies but don’t know where to start, here is a resource on Making IT Governance Work for Your Nonprofit.

What is Good Tech Fest?

Good Tech Fest is a global virtual conference on how you can responsibly use emerging technologies for impact. Whether it’s AI, web3, machine learning, or just simple mobile and application development, Good Tech Fest is the place to hear from practitioners using these technologies for impact.

Community IT is always looking for opportunities to provide nonprofits technical tips and insights from our 20+ years of serving this sector. Matt Eshleman is happy to sit down with the Good Tech Fest audience to talk about 3 AI impacts on cybersecurity at nonprofits. Check back after the webinar for the video, podcast and transcript.

As with all our webinars, these presentations are appropriate for an audience of varied IT experience.

Community IT is proudly vendor-agnostic and our webinars cover a range of topics and discussions. Webinars are never a sales pitch, always a way to share our knowledge with our community.

Presenter:

As the Chief Technology Officer at Community IT, Matthew Eshleman leads the team responsible for strategic planning, research, and implementation of the technology platforms used by nonprofit organization clients to be secure and productive. With a deep background in network infrastructure, he fundamentally understands how nonprofit tech works and interoperates both in the office and in the cloud. With extensive experience serving nonprofits, Matt also understands nonprofit culture and constraints, and has a history of implementing cost-effective and secure solutions at the enterprise level.

Matt has over 22 years of expertise in cybersecurity, IT support, team leadership, software selection and research, and client support. Matt is a frequent speaker on cybersecurity topics for nonprofits and has presented at NTEN events, the Inside NGO conference, Nonprofit Risk Management Summit and Credit Builders Alliance Symposium, LGBT MAP Finance Conference, and Tech Forward Conference. He is also the session designer and trainer for TechSoup’s Digital Security course, and our resident cybersecurity expert.

Matt holds dual degrees in Computer Science and Computer Information Systems from Eastern Mennonite University, and an MBA from the Carey School of Business at Johns Hopkins University.

He is available as a speaker on cybersecurity topics affecting nonprofits, including cyber insurance compliance, staff training, and incident response. You can view Matt’s free cybersecurity videos from past webinars here.

Matt always enjoys talking about ways cybersecurity fundamentals can keep your nonprofit safer. He was happy to be asked to give this presentation on 3 AI impacts on cybersecurity at nonprofits for Good Tech Fest.

Transcript

Matt Eshleman: Welcome to the session on Three AI Impacts on Cybersecurity in 2024. I’m Matthew Eshleman, and I’m the CTO at Community IT Innovators. I’ve been focused on providing strategic technology planning and cybersecurity guidance for nonprofits for over 20 years, and I’m looking forward to talking with you all today on this important topic.

Every nonprofit organization is at risk from cybercriminals. It doesn’t matter the size of your organization or how noble your mission is, each person is a potential target. Technology moves quickly, and the introduction and rapid adoption of AI tools means that while we as members of the nonprofit tech community have access to amazing new tools, so do the threat actors.

I’ll turn it over to our Director of Engagement, Carolyn Woodard, who will talk through our learning objectives for today.

Carolyn Woodard: Thanks, Matt, if that really was Matt.

Today, our learning objectives are that, by the end of the session, you will be able to

- understand the basics of AI and nonprofits,

- learn three ways AI will impact cybersecurity: social engineering, trust, and data risks,

- understand evolving cybersecurity best practices,

- and learn the role of governance, policies, and training in protecting your nonprofit from cyber threats.

Before we start, I want to let you know about Community IT. We’re doing this session today at Good Tech Fest. If you’re not familiar with us, a little bit about us. We’re a 100% employee-owned managed services provider (MSP). We provide outsourced IT support to nonprofits exclusively. Our mission is to help nonprofits accomplish their missions through the effective use of technology. We are big fans of what well-managed IT can do for your nonprofit.

We serve nonprofits across the US, and we’ve been doing this for over 20 years. We’re technology experts and we’re consistently given an MSP 501 recognition for being a top MSP, which is an honor we received again in 2023.

I want to remind everyone that for presentations, Community IT is vendor agnostic. We’ll be talking about some tools today, but we only make recommendations to our clients and only based on their specific business needs. And we never try to get a client into a product because we get an incentive or a benefit from that.

We do consider ourselves a best of breed provider. It’s our job to know the landscape, what tools are available, reputable, and widely used. And we make recommendations on that basis for our clients based on their business needs, priorities, and budget. And you can imagine we have a lot of clients and just a lot of people in our sector asking about AI.

I’m very excited to have Matt here today. He is our cybersecurity expert and he’s going to give us some of his insights on that specifically.

Our Approach to Cybersecurity

Matt Eshleman: Let’s talk a little bit about our approach to cybersecurity because I think while we want to talk about AI, it’s helpful just to understand the cybersecurity landscape.

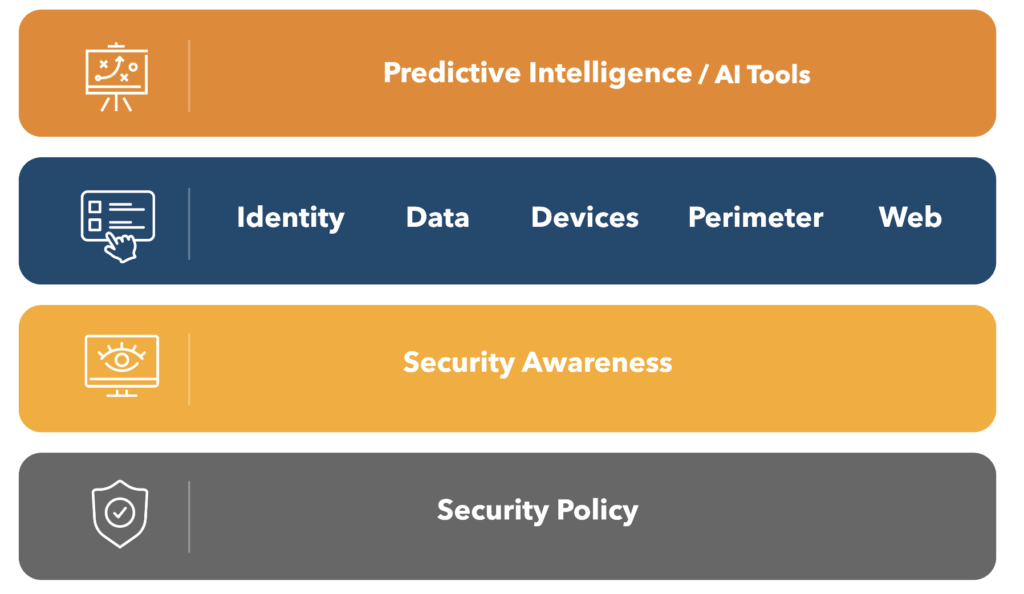

Foundational concepts such as policy continue to provide guidance for the technical solutions that can be built on top.

You’ll notice that directly on top of policy in our foundational model is security awareness training. This is something I believe in very strongly, even as a tech person. You know, I’ve got the CTO title; I love all the technology tools. I really think at the end of the day, having an engaged and informed and aware staff is really the best defense that you can have against all the cybercrime that’s out there. That’s why we put that as a foundational layer in our security model.

As Carolyn mentioned, we’re a service provider. We work with about 180, 185 different organizations. We support about 7,000 nonprofit staff. We see a lot of attacks are really initiated by people clicking on things that they shouldn’t have or getting tricked into updating payment information or buying gift cards for somebody who’s obfuscating their identity. And so even with all the technology tools that are available, they’re not perfect and things will get through. Security awareness training is important.

The layer on top of that is the technology controls around the various areas or surface area of attack. Your digital identity, the data that your organization has, the device that you use, your network perimeter. If you’re still in the office, there might be some network security tools. If you’re at home, there’s also protections that need to be put in place there.

Then the web resources and all the different applications we have.

And then finally, at the pinnacle, we have these predictive intelligence or AI tools.

Perhaps now, those tools are making their way into the layer of protection as well. But again, we view those tools as kind of the icing on the top as opposed to the foundation to build a protection of your organization around.

What is AI?

Talking about AI now specifically, this term AI has been used for many, many years, starting with the definition of the Turing test, which was introduced by Alan Turing in 1950. This session is to talk more specifically about the impacts of AI. We’re not going to do a deep dive on the history and various types of AI platforms available.

But for our purposes, I do think it’s helpful to make a distinction between what we’ve termed here as “regular AI,” or maybe “machine learning” is a better term for that. But that’s where a computer model bases analysis on vast amounts of data and can make some inference around trends or content.

Let’s take a Netflix example. Netflix knows that you’ve watched these romantic comedies, so maybe you’ll like this other romantic comedy, right? It’s doing some analysis, it knows some categorization, it knows what you’ve watched. Maybe it can suggest other things that you might like to watch.

But that’s different from the new AI models that are now termed generative AI. Generative AI tools are able to create new content based on prompts. We could extend our Netflix example: we could tell our generative AI model that we really like the following romantic comedies. I like “When Harry Met Sally,” “The Wedding Singer,” and “Forgetting Sarah Marshall.” Based on those movies, please generate a pitch for a new romantic comedy movie that would appeal to me. So we can see the difference here, right?

This prompt requires the model to understand a number of things, such as the plots of the movies that were referenced. It needs to understand what the genre of romantic comedies is, what a pitch is, et cetera. It requires a lot more understanding to generate that.

I encourage you to take that prompt, see what kind of pitch you get from your Copilot or your Gemini or your ChatGPT. And it’s interesting to see the results come back from that generative analysis, where you’re providing some context and then asking for a result and some ideas from the model.

3 Impacts of AI on Cybersecurity at Nonprofits

Three impacts of AI, specifically, that’s the title of our session here today. And what I see as the CTO of a managed service provider, this AI in general is really interesting. The fun examples that we shared, the new movie pitch or the generative AI models that allow for really impressive image generation based on a set of different prompts, those are really fun and interesting to talk about and to see and to interact with.

But it is helpful to look more specifically at the impacts AI is having on cybersecurity. In general, we’ve seen an immeasurable impact on our world and our work. I don’t want to underestimate just how seismic a shift these AI tools are going to cause in our operations.

The speed and adoption of the technology is pretty breathtaking. For example, ChatGPT had over 100 million users in the first two months of existence, which is just phenomenal to have that type of adoption in just a two month period.

That was last year. So that’s pretty amazing. And we are now seeing that impact in the cyber world.

And these are some things that I identify as trends in terms of how it’s playing out. I think the AI impact in cyber is really enabling new scams and cons at higher impact.

We’ve seen more accurate social engineering attacks.

We’ve certainly seen deep fakes, both audio and visual.

And then the third area that we’ll talk about is around the reputational risk or data risk that’s associated with incorporating these tools and these approaches into organizations.

I: Social Engineering

Talking about social engineering, that’s the first area that I think has the most impact or the most common impact for the organizations, at least that we are working with.

As I mentioned, we are a managed service provider. We work with about 185 organizations. And every year we classify all of the different security incidents that are reported to us from our clients.

In our incident report, which we just delivered, we recorded a record number of phishing emails. Those are messages that obfuscate the sender, trying to get somebody to take some action. We saw about a 169% increase in reported phishing messages from our customers in 2023.

And I do think that that is the result of the widespread availability of these generative AI tools to make it easier to craft tailored content to specific individuals to get them to take that action. We see it a lot in the AI tools for fundraising. “How can you generate really compelling fundraising appeals?”

I think the threat actors are using the same thing. “How can you create a compelling message to get the finance person to click on this message?” or, “How can you create a compelling message to get the operational person to take this step?”

How can you encourage or maybe trick a new hire into buying a gift card as a token of goodwill for their colleague? I do think that the increase in these generative AI tools has made these phishing messages much more effective.

Some of the things that we’ve traditionally relied on to identify this as fake, such as misspelled words or poor grammar, are just not as reliable anymore because AI tools are able to generate all of that content for us in proper English.

We also see this in the overall statistics from the FBI. They saw a year over year increase of 22% in the amount of direct dollar loss associated with cybercrime.

We’re now up to $12.5 billion, and that’s directly associated with cybercrime. And again, we see that as well: an increase in the number of compromised accounts, combined with a lack of robust financial controls, contributed to a jump in wire fraud at the clients that we serve. The social engineering aspect of AI, I think, cannot be overstated in terms of just how significant that is for organizations going about their day-to-day work.

II: Trust

The second area that I’ll term here is threats against trust. Can you believe your ears? Can you believe your eyes? How do you make decisions?

In the first example, we talked about an increase in phishing: those targeted messages, the content generation. Deepfakes really go beyond the written word.

Maybe you picked up on it, you probably picked up on it at the beginning of this session, but my introduction, it wasn’t me. That was an AI voice clone that I created for free in less than 10 minutes. And it’s not perfect, but the fact that you can get a pretty good approximation of somebody’s voice with low effort is pretty jarring. And as somebody who has lots of podcast content out there, lots of webinars, my voice, my identity, that’s all available for these tools to be used to ingest and to get a more accurate model of my delivery, my tone, my mannerisms. And that is concerning.

For what I could create for free in 10 minutes, if you went through the whole model and followed the prompts to improve it to get all the nuance, we could have had a much more effective tool. And we see that these types of attacks are already occurring.

More sophisticated examples are in use already with video. There’s an example from a multinational firm with an office in Hong Kong that lost $25 million after a deepfake video call was used to verify a transaction.

The theme that we see in these attacks is they establish trust. There was probably a compromised account involved. They were having some back-and-forth interaction. And then the CFO was like, okay, well, I need to have a meeting to verify this conversation. Okay, no problem.

This is a well-resourced attack. And we can see through one video call, they authorize the transaction, and $25 million is out the door.

We have contemporary examples of this kind of threat. This isn’t just sci-fi. It’s not just in a movie. These are things that are actually occurring today. Anyone with a computer and an internet connection can make convincing fake audio and video. For a lot of these AI services, the race is on really to capture as many accounts, to get as many users enrolled in their platform. And there isn’t a very high bar to entry. Like I said, I didn’t have to put in a credit card. I just created an account and I was able to do this initial model with very little barriers.

There’s lots of tools out there. They’re all trying to make their impact, build their business case. Right now that is largely driven by how many users they have signed up. These tools are out there, they’re free, and they’re easy to use and moderately good.

We’ve also seen social media videos. Maybe famous people having the AI voiceover can be entertaining. It’s also creating a crisis of trust in what you can and cannot believe. As we’re getting into our political season, I know this is a very big area of concern for many organizations and institutions. You know, if you see a candidate say something outrageous, well, maybe it’s them or maybe not. Have a candidate say something thoughtful and maybe align with your views? Well, that might not be them either.

From a cybersecurity perspective, it is going to be really important for organizations to have well-defined, established policies and processes, with tools in place to

- verify requests,

- demonstrate legitimacy,

- and confirm the request.

A lot of the hallmarks that we’ve relied on: call somebody on the phone, have a screen sharing session, we may not be able to rely on them in the same way anymore.

As a managed service provider, we have existing processes in place for dealing with clients that lose their password or lose their phone and need their MFA reset or an upgrade. They need access to a different directory or new permissions.

We have processes in place to verify and confirm those requests, but we’re going to need to continue to update and evolve those processes and technology solutions as well to ensure that we are keeping ahead of what the threat actors are doing.

III: Reputation/Data Risks

What’s titled here as the reputational risk, perhaps also could be focused more specifically on data. For most nonprofits, our experience is that these really sophisticated, targeted attacks are relatively rare. They’re opportunistic from the perspective of threat actors.

But there is a relatively high degree of loss that’s associated just by end user behavior. You sent an attachment to the wrong email address. You left your laptop that had your organization’s strategic plan in a taxi, right? These are things that while we may not traditionally think of them as cybersecurity attacks, they do represent loss and data loss to an organization.

That attention to unintentional user behavior really needs to extend to widely available, really powerful AI tools that are free to access and maybe don’t have the same licensing and usage expectations that we have.

This is a shift from those previous two examples that were framed as threats from the outside. AI introduces more opportunities for errors and unintentional or perhaps intentional threats against the organization’s data.

I’ve often joked that new technology just helps you make bad decisions faster, and to some extent, that’s true.

If the organization doesn’t have good policy, good governance, good procedures, implementing new AI solutions on top of a creaky IT foundation is just going to accelerate and exacerbate fundamental problems that exist within the organization. I am really encouraged by the overall thoughtful approach that the nonprofit and philanthropy sector has taken.

There are lots of great resources that are available in terms of thoughtful and intentional AI adoption guides.

TAG has a really great resource that I would encourage folks to review. We did a webinar with them to go through the tool. I see NTEN has just published their AI adoption guide.

There are really thoughtful and intentional resources out there to help support organizations as they go through this process. However, that intentionality really needs to permeate throughout the entire organization, not just the executive directors or the people at the top, but all staff, because having one or several staffers using ungoverned AI tools is going to have an impact in the entire organization.

As a specific example: enabling Microsoft Copilot for Nonprofits. If you’re a Microsoft 365 customer, if you’re enabling Copilot and assigning licenses to staff, that may cause staff to discover data that was once hidden by obscurity but is now available through the tool, since Copilot permissions follow the user.

And if you were operating on the model of security through obscurity, AI tools are going to lift the veil on that. We also see that in terms of the widespread adoption of AI tools at an organization through the endpoint management that we do, we can see where folks are going from their web traffic. And I would say probably 80% of all endpoints (users) are going to AI tools at this point. And they’re not going to approved organizational AI tools. They’re going to whatever tools they happen to find or use or get advertised to them.

Adding AI tools into an organization without really being intentional about what they have access to, how you’re going to manage them, what data they have, and the potential biases that are incorporated into the models can pose a significant data integrity risk for the organization.

The last point here around false model output moves from potential to practical.

We’re using some AI tools in our system, but we don’t have a good sense of how they work. That gets into the conceptual risks, such as data poisoning attacks or sending your private data into a public large language model. And so again, I think there are additional risks associated with just interacting with these models or relying on the output from these models to incorporate in your work product.

A friend of mine works in international health and needs to write a lot of grants and was kind of experimenting with some of these AI tools to help with a grant application.

The written content of the grant application was great, but whenever he was going through and verifying all of the surveys or resources that were included as part of the model, he found that they were all hallucinated. The underlying resources that were used to support his application didn’t exist at all. And if he hadn’t gone through and done that due diligence, he may have submitted something that wasn’t fully vetted.

There’s this risk to the data output that we’re receiving from the models. And verifying or having a process in place to verify this is really important.

All of these areas, and probably more, can be overwhelming when we think about how we protect our organization from all of these new or additional and expanding risks. Unfortunately, what we’re seeing in the data amongst our clients is the amount and velocity of cybersecurity attacks is only increasing over time, and we’re still playing catch up. It’s not a question of if, but it’s a question of when your organization is going to have a data breach, or a security incident.

Actions to Take Now

Let’s talk about some ways that we can actually protect and address the ever-increasing cyber threats that exist. As I like to say, and we saw in our initial model, establishing and reviewing existing policies is absolutely critical because it helps the organization make decisions about the technology solutions or the approach that you are going to take once you have an agreed upon policy framework.

- How does your organization handle BYOD?

- How do you handle the use of cloud systems?

- How do you evaluate vendors that you use?

- Do you have any formal compliance standards that you need to follow?

All of these questions, once you talk through and agree upon and organize in the policy, can help make the selection and implementation of technology tools, cybersecurity solutions, or what you need to implement a lot easier.

If you have not already had a formal cybersecurity training program in place, I think that’s a great place to start.

We can have all of the fancy technology tools in place, the MDR, the XDR, the alphabet soup of cybersecurity protections, but unless you engage and train and educate your staff, something’s going to get through. We’re reliant upon our end users to make good decisions around how they respond.

Do you have a good culture in your organization? Do people feel free to reach out to IT with questions? Or is it adversarial and they feel like they’re talked down to if they submit a support ticket with a question? Have a good security awareness training program that includes testing, fake phishing messages, and identify staff that might need additional training or resources. Have a cybersecurity training program.

We don’t just do one monolithic training once a year. I’m a big believer in having shorter but more frequent training. A quarterly basis is where we’re at now. We have some organizations that are looking for training on a monthly basis.

But just that 10, 15 minute time to think about or to get educated on some new topic is really important and provides a tremendous amount of value.

If you’re looking at adopting AI tools, taking some time to be intentional around reviewing the security roles and access permissions is a great place to start.

As I mentioned, specifically with Microsoft Copilot, I find it fantastic. It’s really great. It’s been a great resource to help speed some content generation along for me. But it’s also important to understand the permission model and understand that Copilot has access to everything that the assigned user does.

But if your Finance and Operations folder in SharePoint is not restricted to just the staff who need to have access, then that means other folks who get licensing may get access to that data.

We had a staff meeting, and one of the questions was, when are the staff birthdays? And one of my colleagues was in Copilot, and asked it “When are staff birthdays?”

And it dug up some document that we had used back in 2007 where we had a list of staff birthdays. That’s what Copilot surfaced. It wasn’t something that we were initially looking for. We didn’t even realize that it still existed, but it was a really clear example of how these tools work. They’ll surface information that maybe you didn’t realize was there or didn’t realize that staff had access to.
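As an editorial illustration of the permissions review described above, here is a minimal Python sketch. It assumes you have already exported some kind of sharing report from your file platform; the row format, the keyword list, and the group names are all hypothetical and are not taken from any Microsoft tool or from the presentation.

```python
# Hypothetical keywords and group names; adjust these to your own environment.
SENSITIVE_KEYWORDS = ("finance", "payroll", "salary", "birthdays")
BROAD_GROUPS = {"everyone", "all staff"}


def find_overshared(rows):
    """Return paths that look sensitive and are shared with a broad group."""
    flagged = []
    for row in rows:
        path = row["path"].lower()
        # Assumed format: group names separated by semicolons.
        groups = {g.strip().lower() for g in row["shared_with"].split(";")}
        looks_sensitive = any(word in path for word in SENSITIVE_KEYWORDS)
        if looks_sensitive and groups & BROAD_GROUPS:
            flagged.append(row["path"])
    return flagged


# Made-up rows standing in for a permissions report exported by an admin.
report = [
    {"path": "/Finance and Operations/Budget.xlsx", "shared_with": "Finance Team"},
    {"path": "/Archive/2007/Staff Birthdays.docx", "shared_with": "Everyone"},
    {"path": "/Programs/Newsletter.docx", "shared_with": "All Staff"},
]
print(find_overshared(report))
```

With the made-up rows above, only the archived birthdays document is flagged, because it both matches a keyword and is shared with a broad group. A keyword match is a crude signal, so a list like this is best treated as a starting point for a human review rather than as a verdict.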

The final two recommendations are around implementing

- email security solutions

- and managed cloud security.

As I mentioned, we had an increase of almost 170% in spear phishing. And for small to mid-sized organizations, if you’re between 15 and 500 staff, what we see is the most likely risk to your organization is going to be coming through email.

Investing and reviewing your email security tools is a good return on investment because it gives you protection against the most likely attacks. That’s where we see the most of those attacks originate. Reviewing and making sure that you’ve got a good tool in place is really important in reducing the risk associated with the increasing volume and sophisticated language around cybersecurity attacks.

The final recommendation is around managed cloud security. We haven’t talked about this in the vein of AI, but it ties in with what we see as the most common risk to organizations in terms of their impact. It starts off with spear phishing and then moves to compromising your digital identity.

So, tools that are focused on monitoring and alerting on suspicious sign-ins, unusual application registrations, that’s really an important area. We see a lot of compromised digital accounts, much more than we see virus or malicious activity on endpoints.

I think this is a space that’s still catching up in terms of tools that are able to do this and do this effectively. But most organizations have moved mostly, if not entirely, into the cloud and are using cloud applications. Understanding all the different things that a digital identity gets you access to and building a good protection program around that is really important to provide protection for your organization.
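To make "alerting on suspicious sign-ins" a little more concrete, here is a toy Python sketch of one possible heuristic: flag a sign-in from a country not previously seen for that account. The event format, the addresses, and the rule itself are assumptions for illustration only. Commercial monitoring tools combine many more signals than this.

```python
from collections import defaultdict


def flag_new_country_sign_ins(events):
    """Flag sign-ins from a country not previously seen for that user.

    `events` is an iterable of (user, country) tuples in chronological order.
    The first sign-in for each user sets the baseline and is not flagged.
    """
    seen = defaultdict(set)
    alerts = []
    for user, country in events:
        if seen[user] and country not in seen[user]:
            alerts.append((user, country))
        seen[user].add(country)
    return alerts


# Made-up sign-in events using placeholder addresses.
sample_events = [
    ("finance@example.org", "US"),
    ("finance@example.org", "US"),
    ("director@example.org", "US"),
    ("finance@example.org", "RO"),
]
print(flag_new_country_sign_ins(sample_events))
```

In this sample only the last event is flagged. A rule this simple would also fire for staff who travel, which is one reason alerts like this usually feed a review process rather than an automatic block.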

Q and A

Carolyn Woodard:

Do you have any specific feedback on cautions with the use of Zoom AI companion?

This person says they had a negative experience with an employee inadvertently using Autopilot about a year ago. They’re cautious now about Zoom AI companion. Do you have anything specific on that?

Matt Eshleman: I’ll probably give the consulting answer, which is it depends on what’s your policy around it. For a lot of organizations, the choice is not necessarily, are we going to proactively adopt this AI tool or that AI tool? But, now all these AI tools are getting pushed into applications that we’re already using, and how do we respond? Are we able to turn them off or on, and how do we adopt them in an intentional way?

I think probably the first question to ask is how are you going to use these tools?

It was interesting at the Microsoft training I went to about Copilot, the trainer was like, you should just turn this on everything. Every meeting you have, it should all be recorded in Copilot, and these are all work settings, and it should all be open.

There was a lot of pushback from the other MSPs saying, hey, sometimes I want to have private conversations, and I don’t necessarily want some AI bot recording and transcribing and interpreting my every word. It’s still very new, even though the Turing test was passed in 2014. I had AI classes in college in 2000. These tools are still relatively immature.

ChatGPT just got rolling a year or two ago, and I think the adoption questions are still new, the reliability of the models is still evolving, and I think it’s important to still have pretty tight control over how you’re handling the output from the various systems, to verify whether it transcribed correctly and whether we’re talking about the right thing. I think intentional adoption and review of all output is important.

That’s something I would probably put in your AI policy. We use these, here’s some tools, here’s how we use it, here’s things we don’t want to do.

Carolyn Woodard: Thank you so much for that, Matt. Someone also responded in the chat that it has to do with community. So if everyone in the meeting agrees that you’re going to use the transcription, you can use AI transcription. Just having that transparency I think is important.

We got another question, about email security. Are there solutions to avoid spoofing of display names?

Matt Eshleman: I think the answer there is unfortunately no, and part of that is due to just the open nature of how email works. You can, as a sender, define any name that you want in terms of sending messages. It’s a pretty open system.

There are new or additional technologies that are now being incorporated into email beyond just SPF, or Sender Policy Framework: DKIM, where senders can sign messages to verify their authenticity, and DMARC, which is saying, if it doesn’t come from this address, it’s not us. There are some additional technology tools that can be put in place to help identify the sender of those messages.
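For readers who have not seen one, a DMARC policy is published as a DNS TXT record at `_dmarc.<your domain>`. The Python sketch below only parses a record string and summarizes its policy tag. It does not perform a DNS lookup, and the record shown uses the placeholder domain example.org rather than any real organization's settings.

```python
def parse_dmarc(record):
    """Split a DMARC TXT record into its tag=value pairs."""
    tags = {}
    for part in record.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue
        key, value = part.split("=", 1)
        tags[key.strip().lower()] = value.strip()
    return tags


def describe_policy(record):
    """Summarize what receivers are asked to do with mail that fails DMARC."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return "not a DMARC record"
    meanings = {
        "none": "monitor only: failing mail is still delivered",
        "quarantine": "failing mail should be treated as suspicious",
        "reject": "failing mail should be rejected",
    }
    return meanings.get(tags.get("p", "").lower(), "missing or unknown policy")


# Illustrative record for the placeholder domain example.org.
example = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.org"
print(describe_policy(example))
```

The `p` tag is the part that matches the "if it doesn’t come from this address, it’s not us" idea: `none` only asks for reports, while `quarantine` and `reject` ask receiving mail servers to act on messages that fail the SPF and DKIM alignment checks.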

But again, that’s where third-party spam tools augment some of this capacity. You can flag in those tools that, here are some of our top executive staff and we want to pay special attention if messages appear like they’re coming from our executive director or the finance person.

And again, maybe both are true. There are some technology solutions, but it’s also just the open nature of the email as a platform.
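The "pay special attention to messages that appear to come from our executive director" idea can be sketched in a few lines of Python. This is an illustration of the concept only, not how any particular email security product works. The staff names and addresses are made-up placeholders.

```python
from email.utils import parseaddr

# Hypothetical watch list: display names of protected staff mapped to the
# addresses they legitimately send from. All values are placeholders.
PROTECTED_SENDERS = {
    "jordan rivera": {"jordan.rivera@example.org"},
    "sam patel": {"sam.patel@example.org"},
}


def normalize(name):
    """Lowercase and collapse whitespace so 'Jordan  Rivera' still matches."""
    return " ".join(name.lower().split())


def is_suspicious_sender(from_header):
    """True when the display name matches protected staff but the address does not."""
    display_name, address = parseaddr(from_header)
    known_addresses = PROTECTED_SENDERS.get(normalize(display_name))
    if known_addresses is None:
        return False  # not a name we are watching
    return address.lower() not in known_addresses


print(is_suspicious_sender("Jordan Rivera <jordan.rivera@example.org>"))
print(is_suspicious_sender("Jordan Rivera <jordan.rivera@mail.example.com>"))
```

The first call prints `False` and the second prints `True`: the display name is identical in both, and only the address behind it differs. An exact-match check like this misses look-alike spellings of a name, which is part of why display-name spoofing cannot be eliminated outright.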

Carolyn Woodard:

Are there books or resources that you recommend to boards and staff to help them understand the functions and risks?

Someone in the chat recommends Ethan Mollick’s “Co-Intelligence” book, but do you have other sources or anything like that?

Matt Eshleman: Yeah, that is a good question. I think there are lots of deep thinkers that are really spending a lot of time being intentional around this topic. The speaker at the TAG Conference, who talked about AI adoption, I thought was really helpful to put some perspective on the purpose of AI and how we are to use it.

Carolyn Woodard: His name was Jaron Lanier, and you can find his books and some of his speaking at his website, jaronlanier.com.

I want to thank everybody who came to our session. We really appreciate it.

Our learning objectives, which Matt, you did a good job of hitting, were to understand the basics and learn three ways that AI will impact cybersecurity. That was just so interesting, thinking about trust and social engineering and being able to believe your eyes and ears. They were also to understand evolving cybersecurity best practices and learn the role of governance, policies, and training in protecting your nonprofit from AI attacks.

Matt Eshleman: Thank you.